Processing Large Numbers of Documents From The Web

Have you ever needed to call a server-side script that processes thousands upon thousands of documents or rows of data? If you're doing this from a browser, you're going to need to take a couple of things into account:

- Web connections, agents and scripts can time out.

- Users need reassuring feedback that something is actually happening.

If you make a website with a button which, when clicked, calls a script that loops over 10,000-plus documents, the likelihood is that the browser will time out, or the user will get sick of looking at the "Waiting for server..." message, assume it's broken, and refresh the page or close the browser.

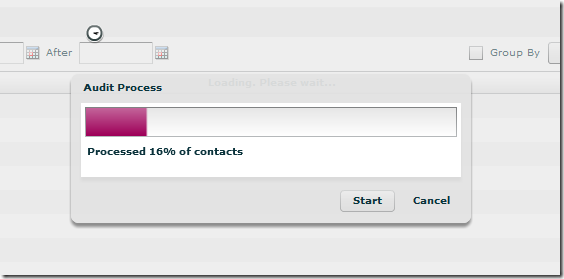

What would be better is having some visual way of showing progress to the user. Couple this with an approach that only processes a set amount of data per execution and you have a safe and user-friendly way of doing it.

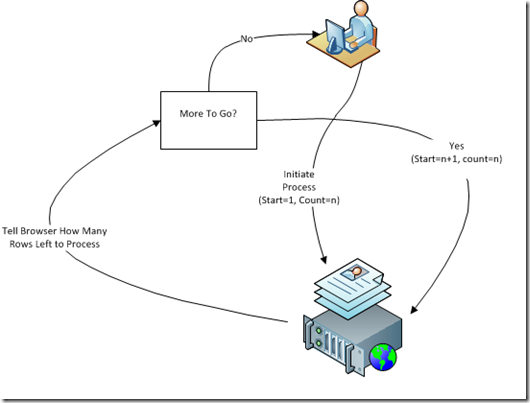

The approach I came up with was to use a round-robin method of calling the back-end script multiple times, processing a set number of documents each time, until the server reported back that all were processed.
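To make the idea concrete, here is a minimal sketch in Python rather than Flex. It is not the code behind the screenshot: `FakeServer`, `process_batch` and `run_until_done` are hypothetical names, and the "server" is just an in-memory object standing in for a real HTTP endpoint.

```python
from dataclasses import dataclass, field


@dataclass
class FakeServer:
    """Hypothetical stand-in for the back-end script (normally an HTTP endpoint)."""
    pending: list = field(default_factory=lambda: list(range(1050)))
    done: int = 0

    def process_batch(self, batch_size: int) -> dict:
        # Process at most batch_size documents, then report progress.
        batch = self.pending[:batch_size]
        del self.pending[:batch_size]
        self.done += len(batch)  # the real per-document work would happen here
        return {"processed": self.done, "remaining": len(self.pending)}


def run_until_done(server: FakeServer, batch_size: int = 100, on_progress=print) -> int:
    """Call the back end repeatedly until it reports nothing left to do."""
    calls = 0
    while True:
        status = server.process_batch(batch_size)
        calls += 1
        on_progress(status)  # e.g. update a progress bar
        if status["remaining"] == 0:
            return calls


# 1,050 documents in batches of 100 takes 11 short calls instead of one long one.
calls_made = run_until_done(FakeServer(), batch_size=100, on_progress=lambda s: None)
```

Each call stays short enough to avoid a timeout, and every response gives the front end something to show the user.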

I'll post a demo and some code examples later this week. For now I'll leave you with a grab of what I ended up with:

As you can probably tell, this is a Flex app. But, it doesn't really matter what technologies you use, either on the front or the backend. The approach can apply to anything.

Is it an approach commonly used though? I can't remember seeing it discussed or used anywhere else, but find it hard to believe I can be the first to discuss it.

Perhaps there are some pitfalls to doing it this way?

An alternative way might be to kick off the process and have it periodically update a temporary document with the current number of documents processed and whether there has been an error.

Then have the web side periodically check this document (the original call returned a reference to this document).

It feels slightly better, as your batch process will complete regardless of what the user does (allowing for agent timeouts etc.). If you're hitting agent timeouts during the daytime (the default is, I think, 10 minutes), then I wonder whether the process should really be running during the day.

If I really needed to get around that, I would split the batch into individual control documents, which a scheduled agent would process and then notify the user via email: the same approach you're taking, but server side rather than client side. You could still have the Flex app / JavaScript within an iframe perform a periodic check on the control document to see whether all of the processing has finished, but this way you're not reliant on the client being there for the process to complete.
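A rough sketch of the status-document idea, in Python for illustration only. Everything here is an assumption: the `status_docs` dictionary stands in for real temporary documents, a thread stands in for the server-side agent, and `start_job`, `read_status` and `poll_until_finished` are made-up names.

```python
import threading
import time
import uuid

# Hypothetical in-memory stand-in for the temporary status documents.
status_docs = {}
status_lock = threading.Lock()


def _worker(job_id: str, docs: list) -> None:
    for i, doc in enumerate(docs, start=1):
        try:
            _ = doc * 2  # placeholder for the real per-document work
        except Exception as exc:
            with status_lock:
                status_docs[job_id].update(error=str(exc), finished=True)
            return
        # Update the status document periodically, not on every document.
        if i % 100 == 0 or i == len(docs):
            with status_lock:
                status_docs[job_id]["processed"] = i
    with status_lock:
        status_docs[job_id]["finished"] = True


def start_job(docs: list) -> str:
    """Kick off processing and return a reference to the status document."""
    job_id = uuid.uuid4().hex
    with status_lock:
        status_docs[job_id] = {
            "total": len(docs), "processed": 0, "error": None, "finished": False,
        }
    threading.Thread(target=_worker, args=(job_id, docs), daemon=True).start()
    return job_id


def read_status(job_id: str) -> dict:
    """What the web side would fetch on each periodic check."""
    with status_lock:
        return dict(status_docs[job_id])


def poll_until_finished(job_id: str, interval: float = 0.01) -> dict:
    while True:
        status = read_status(job_id)
        if status["finished"]:
            return status
        time.sleep(interval)


job = start_job(list(range(1000)))
final = poll_until_finished(job)
```

The job carries on whether or not anyone polls it, which is the point of the approach: the browser only reads progress, it never drives it.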

Interesting. I hadn't thought of that approach.

I guess, as always, it's a case of horses for courses. In the scenario I was dealing with I think my approach might fit better. The process will only happen once a year and it's in the user's interest to make sure they stick around until it's complete.

Indeed, I've had the same approach as @1 Mark: Schedule the same agent frequently and have it process part of the docs. I usually keep track of where I left off (e.g. in a profile field).
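As an illustration of keeping track of where a scheduled run left off, here is a small Python sketch. It assumes a JSON file in place of a profile field and a stable document order between runs; `run_scheduled_pass` and `next_index` are hypothetical names.

```python
import json
import tempfile
from pathlib import Path


def run_scheduled_pass(docs, checkpoint: Path, max_per_run: int) -> int:
    """Process up to max_per_run docs, resuming from the saved position.

    Returns how many documents are still left after this pass.
    """
    start = json.loads(checkpoint.read_text())["next_index"] if checkpoint.exists() else 0
    end = min(start + max_per_run, len(docs))
    for doc in docs[start:end]:
        pass  # the real per-document work would go here
    # Persist where we stopped so the next scheduled run can pick up from here.
    checkpoint.write_text(json.dumps({"next_index": end}))
    return len(docs) - end


docs = [f"doc-{n}" for n in range(250)]
with tempfile.TemporaryDirectory() as tmp:
    checkpoint_file = Path(tmp) / "checkpoint.json"
    remaining_after_each_run = []
    while True:  # each iteration stands in for one scheduled run
        left = run_scheduled_pass(docs, checkpoint_file, max_per_run=100)
        remaining_after_each_run.append(left)
        if left == 0:
            break
```

An index-based checkpoint only works if the set and order of documents do not change between runs; otherwise a per-document "processed" flag is the safer marker.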

This is one of many use cases where the memory scopes in XPages come in handy. I often use the JSON-RPC component from the Extension Library for this type of processing: each time the method is called, it checks the viewScope ("view" in the MVC sense, not in the Domino index sense) to see where it left off, processes the next batch, updates the viewScope, then returns a JSON packet indicating how many were processed and how many remain, allowing some visual progress indicator to be updated. Since the server is keeping pointers such as the view entry position in memory, picking up where the last batch left off is fairly efficient.

I also find it useful to wrap the client-side portion of this pattern in a configurable object, allowing me to define on a case-by-case basis how many "chunks" the operation will be divided into. Depending upon just how large the volume of processing is estimated to be, in some cases it may make sense to process 10% at a time; in others (e.g. extremely large volumes), 1% at a time, so that the user can see timely progress even though the entire operation may take several minutes.

What I have done in the past is break the task up into manageable chunks - means more code but better user experience.

1) Run agent to return the number of documents to process

2) Use the returned count to calculate how many docs make up 10%.

3) Call the process agent and pass the number of docs to process (10%) as a parameter.

4) Upon completion of the 10%, update the visual indicator for the user to show that 10% is complete, and trigger the process agent again with the next 10% to process.

5) Repeat until all ten chunks are done.

This provides feedback to the user and also cuts down the run-time for each individual agent.

I have to say though Tim's method sounds way cooler!
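The numbered steps in this comment come down to a little arithmetic, sketched below in Python. The names `plan_chunks` and `run_in_chunks` are hypothetical, and the two callbacks stand in for the process agent and the progress indicator. Rounding the chunk size up matters: rounding down would leave a few documents unprocessed whenever the total is not a multiple of ten.

```python
def plan_chunks(total_docs: int, parts: int = 10) -> list:
    """Split total_docs into at most `parts` chunk sizes that cover every document."""
    if total_docs <= 0:
        return []
    chunk = -(-total_docs // parts)  # ceiling division, so nothing is left over
    sizes = []
    left = total_docs
    while left > 0:
        size = min(chunk, left)  # the final chunk may be smaller
        sizes.append(size)
        left -= size
    return sizes


def run_in_chunks(total_docs: int, process_agent, update_indicator, parts: int = 10) -> None:
    """Steps 3 to 5: call the agent once per chunk, reporting progress after each."""
    done = 0
    for size in plan_chunks(total_docs, parts):
        process_agent(size)  # hypothetical call to the back-end agent
        done += size
        update_indicator(round(100 * done / total_docs))  # percentage complete


progress_seen = []
run_in_chunks(1000, process_agent=lambda n: None, update_indicator=progress_seen.append)
```

With 1,234 documents this plans nine chunks of 124 and a final chunk of 118.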

Just implemented a small-scale version of something similar here. I took the approach of trusting only the data at the server, rather than server-side scoped memory variables of some flavor (as suggested by others), and used asynchronous client-side calls where each response updates the progress indicator. For now the indicator is just a debug console, but it will later be a progress bar like yours.

The advantage of handling each document as a single request and trusting only the data on the server is that the process can be aborted at any time and neither progress nor data is lost. If the user loses their connection, then when they come back the process starts off by asking the server for the unprocessed data as JSON.

Also, as it's asynchronous, other work can continue while this is going on. If I had thousands of documents though, I can see this approach being much slower than yours. I could clump or batch documents with each call, but if there were a problem with one document in the batch, it'd be difficult to suss out where the problem was and where to resume efficiently, though I suppose flagging failed docs and excluding them from the next batch might work too. The other advantage of this approach is that it scales *down*, which is often overlooked when considering batch processes. I try to make logical units operate on single items and scale up to other management units as needed, rather than start big and try to reverse-engineer the scaling down, as this can cause a lot of rewrites of code and design.
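A toy Python sketch of that idea follows. It is synchronous for brevity, where the real calls would be asynchronous, and it is built entirely on assumptions: the `Doc` class, its `status` values and the "empty body" failure are all hypothetical. The only state is the status stored on each document, so an interrupted session loses nothing and a failed document is flagged rather than retried forever.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: int
    body: str
    status: str = "pending"  # pending | done | failed


def unprocessed(store: dict) -> list:
    """What the server would return as JSON: ids that still need work."""
    return [d.doc_id for d in store.values() if d.status == "pending"]


def process_one(store: dict, doc_id: int) -> str:
    """One request per document; the outcome is recorded on the document itself."""
    doc = store[doc_id]
    try:
        if not doc.body:
            raise ValueError("empty body")  # hypothetical failure condition
        doc.body = doc.body.upper()  # placeholder for the real work
        doc.status = "done"
    except ValueError:
        doc.status = "failed"  # flagged, so it is excluded from the next pass
    return doc.status


store = {i: Doc(i, "" if i == 3 else f"text {i}") for i in range(6)}

# First session: the connection drops after two documents.
for doc_id in unprocessed(store)[:2]:
    process_one(store, doc_id)

# Later session: ask the server what is left and carry on from there.
for doc_id in unprocessed(store):
    process_one(store, doc_id)

failed_ids = [d.doc_id for d in store.values() if d.status == "failed"]
```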

I agree with you, though, it's horses for courses. Each situation has unique requirements to consider. In this case, robustness was desired more so than speed. Altering this balance would yield a solution similar to yours or Mark's.

The only pitfalls I can foresee are that you're tying up the browser for X minutes, and what happens if the user's connection is terminated unexpectedly?

When dealing with thousands of documents, which is going to take a considerable amount of time, I usually trigger a scheduled batch job that is designed to handle a timeout and pick up where it left off. Some have already suggested a "control" document that manages the job, and that's also how I handle it.

It depends on the requirement, but unless the user is prepared to sit in front of a progress bar for 10+ minutes, it's the ol' batch job management approach: an interface they can interactively click on, with options to abort the operation if applicable, or simply an email alert showing progress.

I try and avoid tying up the browser/client for any significant amount of time and also avoid any risks associated with the client breaking the connection.

I've recently been looking into implementing a "Comet" architecture of long polling. It might be overkill for what you are trying to achieve, but it's a very interesting topic.

http://en.wikipedia.org/wiki/Comet_(programming)

Admittedly I've been doing this in ASP.NET/IIS, but I wonder if it would be possible to do in Domino? I remember that there is some kind of HTTP thread limit in Domino?

Jake, I noticed your article also appeared on LotusLearns.com http://www.lotuslearns.com/2011/12/processing-large-numbers-of-documents.html today

Thanks Koen. I think I remember seeing that site some time back and being annoyed, but opting to ignore it.

This time I've posted a comment to politely ask they remove all my content.

I tried to report abuse using the Blogger.com link at the top, but you need a "Google Enterprise" account to report a copyright issue, which I don't have. Nice.

For now I've played a little trick on them. Take another look at the images on the page you linked to.

Using Java, it's easy to spawn a thread and return a control ID to the UI. I would then have, say, a jQuery UI progressbar periodically poll the backend for the status of the ID and receive a status update.
